Optimize the hardware and software in accordance with your evaluation.By default, Autostitch sets the panorama size to 2048x1024px. Determine the impact on cluster area, performance, and timing. Create a simple demonstrator showcasing the mechanism. Can you demonstrate good results in both reactive (i.e.  Experiment with page replacement approaches and determine which is most appropriate. Write the paging code for the DMA core:. An minimal interface to the DMA core to signal page faults and their handling. A translation stage in the scratchpad interconnect checking page indices against a TLB-like structure. Design the hardware enabling paging support:. The implementation steps would be as follows: When a worker core uses paging and requests a missing page, the scratchpad blocks the access until the page is available the necessary page replacement be done fully in software on the DMA core. We propose to determine, using minimal hardware, whether the page requested in each access is present in the cluster's scratchpad or not. Depending on the DMA core code, this can enable regular data movement, caching, and even prefetching with the same hardware. With minimal hardware support for page translation, we can use our programmable DMA to implement paging in the cluster scratchpad, possibly without full virtual memory support. What we are missing in the current cluster is a paging mechanism, similar to those in virtual memory systems, which makes memory accesses to a larger memory space transparent to worker cores. However, the worker cores must still synchronize with the DMA core when data is replaced this is fine for most double-buffered data movement schemes, but not ideal for other applications. Ideally, the worker cores are unaware of the data movement and scheduling in the DMA core, and the two only communicate through thread barriers and semaphores. In many applications, it simplifies the programming model by separating computation and data movement. 
This paradigm opens up entirely new possibilities for efficient computation scheduling.

Experiment with page replacement approaches and determine which is most appropriate. Write the paging code for the DMA core:. An minimal interface to the DMA core to signal page faults and their handling. A translation stage in the scratchpad interconnect checking page indices against a TLB-like structure. Design the hardware enabling paging support:. The implementation steps would be as follows: When a worker core uses paging and requests a missing page, the scratchpad blocks the access until the page is available the necessary page replacement be done fully in software on the DMA core. We propose to determine, using minimal hardware, whether the page requested in each access is present in the cluster's scratchpad or not. Depending on the DMA core code, this can enable regular data movement, caching, and even prefetching with the same hardware. With minimal hardware support for page translation, we can use our programmable DMA to implement paging in the cluster scratchpad, possibly without full virtual memory support. What we are missing in the current cluster is a paging mechanism, similar to those in virtual memory systems, which makes memory accesses to a larger memory space transparent to worker cores. However, the worker cores must still synchronize with the DMA core when data is replaced this is fine for most double-buffered data movement schemes, but not ideal for other applications. Ideally, the worker cores are unaware of the data movement and scheduling in the DMA core, and the two only communicate through thread barriers and semaphores. In many applications, it simplifies the programming model by separating computation and data movement. This paradigm opens up entirely new possibilities for efficient computation scheduling.

This DMA is also controlled by a Snitch core, making the cluster’s data movement fully programmable. To provide its worker cores with data, the cluster includes a large-throughput DMA that moves data between its tightly-coupled scratchpad and external memory. This multicore cluster couples tiny RISC-V Snitch cores with large double-precision FPUs and utilization-boosting extensions to maximize the area and energy spent on useful computation.

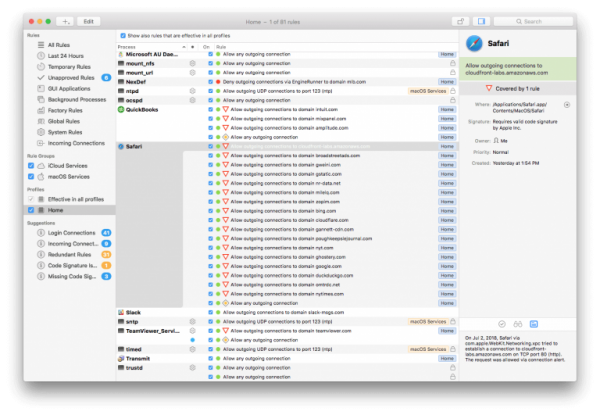

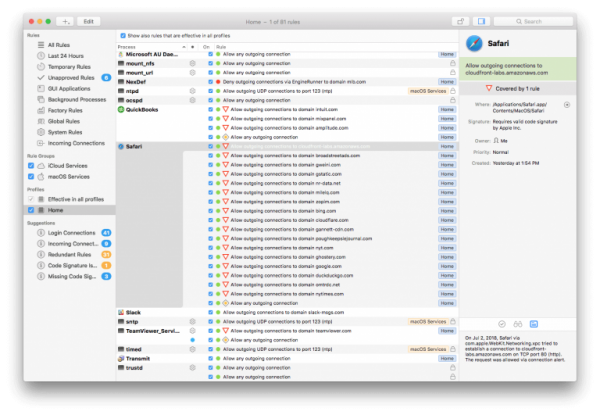

Much of our work on high-performance systems uses the Snitch cluster. Block diagram of the Snitch cluster the DMA core is CC N+1.
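The following is a minimal behavioral model in Python of the blocking-access paging mechanism. It rests on several assumptions that do not come from the project description: a 4 KiB page size, a fully associative translation table, and FIFO replacement. All names (`TranslationStage`, `DmaPager`, `access`, `PAGE_SIZE`) are hypothetical.

The model's translation stage splits each address into a page index and an offset, then looks up the page. On a miss, it queues a page fault and the access stalls. The DMA-core software then picks a frame, logs the DMA transfers it would issue, and updates the table so the stalled access can proceed.

```python
from collections import deque

PAGE_SIZE = 4096  # hypothetical page size in bytes


class TranslationStage:
    """Hypothetical model of the TLB-like check in the scratchpad interconnect."""

    def __init__(self, num_frames):
        self.num_frames = num_frames      # scratchpad frames available for paging
        self.table = {}                   # virtual page index -> scratchpad frame
        self.pending_faults = deque()     # faults signaled to the DMA core

    def translate(self, vaddr):
        """Return the scratchpad address, or None if the access must block."""
        page, offset = divmod(vaddr, PAGE_SIZE)
        frame = self.table.get(page)
        if frame is None:
            # Page fault: signal the DMA core once per missing page.
            if page not in self.pending_faults:
                self.pending_faults.append(page)
            return None
        return frame * PAGE_SIZE + offset


class DmaPager:
    """Hypothetical DMA-core software servicing faults with FIFO replacement."""

    def __init__(self, stage):
        self.stage = stage
        self.free_frames = deque(range(stage.num_frames))
        self.load_order = deque()         # resident pages, oldest first
        self.transfers = []               # log of (action, page, frame)

    def service_faults(self):
        while self.stage.pending_faults:
            page = self.stage.pending_faults.popleft()
            if self.free_frames:
                frame = self.free_frames.popleft()
            else:
                victim = self.load_order.popleft()
                frame = self.stage.table.pop(victim)
                # Simplification: always copy the victim back (no dirty bit).
                self.transfers.append(("evict", victim, frame))
            # Copy the requested page from external memory into the frame.
            self.transfers.append(("fetch", page, frame))
            self.stage.table[page] = frame
            self.load_order.append(page)


def access(stage, pager, vaddr):
    """Model a worker access that stalls until its page is resident.

    In hardware the worker and DMA cores run concurrently; here the DMA
    core's work is simply run inside the loop while the access is blocked.
    """
    paddr = stage.translate(vaddr)
    while paddr is None:
        pager.service_faults()
        paddr = stage.translate(vaddr)
    return paddr


# Two scratchpad frames, three distinct pages: the last access evicts page 0.
stage = TranslationStage(num_frames=2)
pager = DmaPager(stage)
addrs = [access(stage, pager, a) for a in (0x0010, 0x1020, 0x0030, 0x2040)]
print(addrs)  # [16, 4128, 48, 64]
```

In this model, the replacement policy lives entirely in `service_faults`. This is in line with the idea that different DMA-core code can reuse the same translation hardware.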
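For the page-replacement experiments, a cheap first step could be to replay a trace of page indices through candidate policies and count page faults. The sketch below compares FIFO, LRU, and Belady's optimal policy (OPT). OPT needs the whole trace in advance, so it only serves as a lower bound. The trace is the classic textbook reference string rather than a Snitch workload, and the function names are hypothetical.

```python
from collections import OrderedDict


def count_faults_fifo(trace, num_frames):
    """Evict the page that was loaded earliest."""
    resident = []                       # resident pages in load order
    faults = 0
    for page in trace:
        if page in resident:
            continue
        faults += 1
        if len(resident) == num_frames:
            resident.pop(0)
        resident.append(page)
    return faults


def count_faults_lru(trace, num_frames):
    """Evict the page that was used least recently."""
    resident = OrderedDict()            # least recently used page first
    faults = 0
    for page in trace:
        if page in resident:
            resident.move_to_end(page)  # mark as most recently used
            continue
        faults += 1
        if len(resident) == num_frames:
            resident.popitem(last=False)
        resident[page] = None
    return faults


def next_use(trace, page, start):
    """Index of the next access to page at or after start (len(trace) if none)."""
    try:
        return trace.index(page, start)
    except ValueError:
        return len(trace)


def count_faults_opt(trace, num_frames):
    """Belady's optimal policy: evict the page whose next use is furthest away."""
    resident = set()
    faults = 0
    for i, page in enumerate(trace):
        if page in resident:
            continue
        faults += 1
        if len(resident) == num_frames:
            victim = max(resident, key=lambda p: next_use(trace, p, i + 1))
            resident.remove(victim)
        resident.add(page)
    return faults


# Hypothetical trace of page indices (classic textbook reference string).
trace = [7, 0, 1, 2, 0, 3, 0, 4, 2, 3, 0, 3, 2, 1, 2, 0, 1, 7, 0, 1]
results = {
    "FIFO": count_faults_fifo(trace, 3),
    "LRU": count_faults_lru(trace, 3),
    "OPT": count_faults_opt(trace, 3),
}
print(results)  # {'FIFO': 15, 'LRU': 12, 'OPT': 9}
```

These counts hold only for this trace. One design caveat applies under the proposed scheme, where the DMA core is signaled only on page faults: exact LRU would need per-access recency information. That information would likely have to come from the translation hardware or be approximated in software.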
